Yesterday there was a small earthquake in Melbourne. Within a few minutes, the Geoscience Australia website was returning 503 errors, quite possibly because of the surge in traffic.

This post collects a few quick thoughts on building websites that can handle load.

Why websites crash

Websites stop working under heavy load because the server doesn’t have enough resources to process every request. The bottleneck is usually one of:

- Bandwidth saturation, indicated by timeouts and very slow load times.

- Web server or cache overload, indicated by refused connections or server (5XX) errors.

- Database server overload, indicated by server errors.

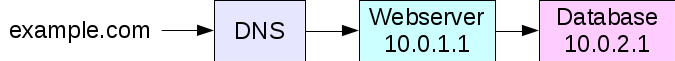
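To get a feel for which of these limits you'll hit first, you can estimate each one's ceiling in requests per second. Here's a rough Python sketch; it assumes every request ties up one worker for a fixed time and every response is the same size, and the figures in it are made up for illustration rather than measured anywhere.

```python
# Back-of-the-envelope capacity ceilings. All inputs are hypothetical.


def worker_limit(workers: int, ms_per_request: float) -> float:
    """Requests/second a pool of workers can sustain if each request
    keeps one worker busy for ms_per_request milliseconds."""
    return workers * 1000 / ms_per_request


def bandwidth_limit(link_mbit_per_s: float, page_kbytes: float) -> float:
    """Requests/second before the network link is saturated
    (ignores protocol overhead)."""
    bits_per_page = page_kbytes * 1000 * 8
    return link_mbit_per_s * 1_000_000 / bits_per_page


if __name__ == "__main__":
    # e.g. 8 worker processes, 50 ms of server time per request
    print(worker_limit(8, 50))        # 160.0
    # e.g. a 100 Mbit/s uplink serving 500 kB pages
    print(bandwidth_limit(100, 500))  # 25.0
```

Whichever number is smaller is the limit that breaks first. In this made-up case the link saturates long before the workers do, which is the timeout-and-slow-load failure from the first bullet.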

A simple database-driven website might process a request like this:

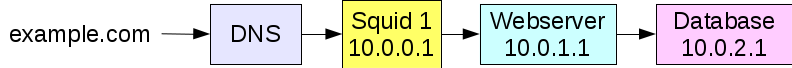

Set up a Squid cache

For sites that use a database to serve requests, a good cache setup is essential. The cache is another server (or server process) that serves stored copies of pages that haven’t changed, so a page only needs to be generated as often as it actually changes.

Squid is a good open-source starting point if you administer a server that struggles under load.

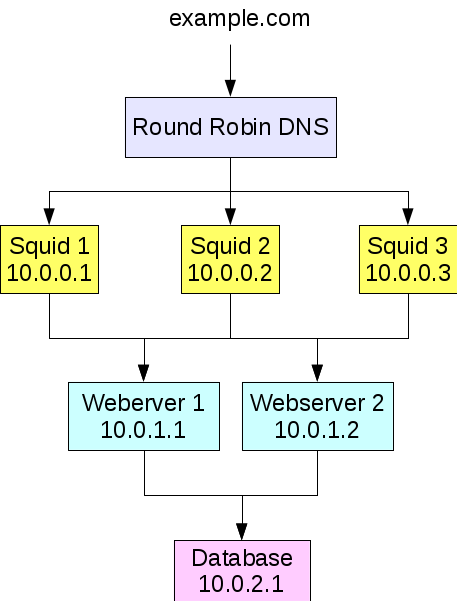
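The payoff is that the expensive step runs once per change (or per expiry period) rather than once per request. The Python sketch below shows the idea with a hypothetical in-process cache that keeps each rendered page for a fixed time-to-live. It only illustrates the principle; it isn't how Squid works internally, and `PageCache`, `render` and `ttl_seconds` are made-up names.

```python
import time
from typing import Callable


class PageCache:
    """Minimal in-memory page cache with a fixed time-to-live (illustrative)."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.render = render        # the expensive, database-backed step
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be simulated
        self.store: dict[str, tuple[float, str]] = {}  # path -> (expiry, page)
        self.renders = 0            # how often we actually regenerated a page

    def get(self, path: str) -> str:
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]         # cache hit: no database work at all
        page = self.render(path)    # miss or expired: regenerate once
        self.renders += 1
        self.store[path] = (now + self.ttl, page)
        return page


if __name__ == "__main__":
    fake_time = [0.0]
    cache = PageCache(lambda p: f"page for {p}", ttl_seconds=60,
                      clock=lambda: fake_time[0])
    for _ in range(1000):
        cache.get("/")
    print(cache.renders)  # 1: a thousand requests, one render
    fake_time[0] = 61.0   # the cached copy has now expired
    cache.get("/")
    print(cache.renders)  # 2
```

A dedicated cache server like Squid does this job in front of the web server, so a traffic spike mostly hits cached copies instead of the database.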

Round-robin DNS

Without a hardware load balancer (read: spending real money), you can still have clients connect to different servers by using round-robin DNS.

The DNS server publishes several addresses for the same hostname and rotates their order on each lookup, so different clients end up connecting to different servers. That lets you run several caches at once.
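Here's a toy Python model of that rotation. `RoundRobinResolver` is a hypothetical stand-in for the name server, the addresses come from the 192.0.2.0/24 documentation range, and each simulated client simply connects to the first address in its answer.

```python
from collections import Counter


class RoundRobinResolver:
    """Toy name server: every lookup returns the full address list,
    rotated by one position relative to the previous answer."""

    def __init__(self, addresses: list[str]) -> None:
        self.addresses = list(addresses)
        self.offset = 0

    def lookup(self) -> list[str]:
        n = len(self.addresses)
        answer = [self.addresses[(self.offset + i) % n] for i in range(n)]
        self.offset = (self.offset + 1) % n  # next client sees a new order
        return answer


if __name__ == "__main__":
    resolver = RoundRobinResolver(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
    # Nine clients, each connecting to the first address it is given.
    hits = Counter(resolver.lookup()[0] for _ in range(9))
    print(hits)  # each cache receives 3 of the 9 clients
```

In practice the spread is rougher than this, because resolvers and clients cache answers for the record's TTL, and plain round-robin DNS has no idea whether a given server is actually up.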