As of April, there is a qjoypad package in the official Debian repository (see https://packages.debian.org/unstable/main/qjoypad). This program lets you map joystick events to keyboard and mouse actions, so you could use, for example, a USB gamepad to browse the web.

I have previously blogged about the lack of usable, packaged programs to do this, and highly recommend getting your hands on a USB gamepad and giving this a shot. Depending on your distribution, you will soon be able to just run:

sudo apt-get install qjoypadThen connect the gamepad and type:

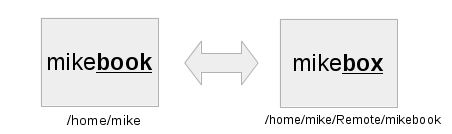

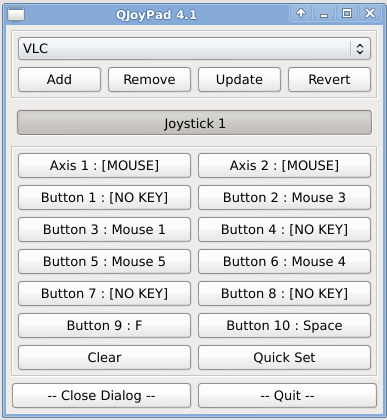

qjoypadYou then get a window which shows you what buttons you can map:

Clicking a button prompts you to press a key on your keyboard or select a mouse button, and each profile can be saved and named for later use.

As far as small utility programs go, I now rely on this about as much as the Gnome calculator.
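Since whether the package has reached your distribution is a matter of timing, here is a rough sketch of a check you could run before installing. It assumes an apt-based system. The function names `pkg_available` and `install_hint` are made up for this example, and treating a successful `apt-cache show` as "installable" is only an approximation.

```shell
#!/bin/sh

# Succeeds if apt-cache knows about a package with this name.
# Assumption: a zero exit status from `apt-cache show` is a good enough
# proxy for "apt-get install would find it".
pkg_available() {
    command -v apt-cache >/dev/null 2>&1 || return 1
    apt-cache show "$1" >/dev/null 2>&1
}

# Prints the install command if the package is known, otherwise a short
# note. It never installs anything itself.
install_hint() {
    if pkg_available "$1"; then
        echo "sudo apt-get install $1"
    else
        echo "$1 is not available from your configured repositories yet"
    fi
}

install_hint qjoypad
```

If it prints the install command, you are good to go. If not, the package probably has not made it into your release yet.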

An alternative: AntiMicro

I’ll also give a mention to AntiMicro, which has similar goals and looks very promising.

As a bit of background, QJoyPad has a limitation around modifier keys: for example, you simply can’t map a joystick button to <Ctrl>+Q, which makes it inefficient to use with most applications. The author of AntiMicro, Travis, sent me an email back in September to point out that his program both solves this issue and has a Debian package available for download.

Whilst I was quite impressed with the functionality in AntiMicro, I found it a whole lot more complex to set up. I think it would be more useful for gamers, as mouse acceleration and key repeats are overkill for my use case (browsing the web and controlling VLC, MythTV, etc.).

So, if you are a power user, then I suggest you give it a shot. Power users with apt-get may also consider joy2key, which is the best non-GUI tool for this, and is available in the Debian repositories.